Posted by Crime Tech Solutions

The following article was published just over a year ago HERE by Dr. Laura Wyckoff, a Fellow at the Bureau of Justice Assistance. We think it is worth exploring:

“Focusing resources on high-crime places, high-rate offenders, and repeat victims can help police effectively reduce crime in their communities. Doing so reinforces the notion that the application of data-driven strategies, such as hotspots policing, problem-oriented policing, and intelligence-led policing, work. Police must know when, where, and how to focus limited resources, as well as how to evaluate the effectiveness of their strategies. Sound crime analysis is paramount to this success.

What is crime analysis exactly? Crime analysis is not simply crime counts or the change in crime counts—that is just information about crime and not an analysis of crime. Crime analysis is a deep examination of the relationships between the different criminogenic factors (e.g., time, place, socio-demographics) surrounding crime or disorder that helps us understand why it occurs. Sound crime analysis diagnoses problems so a response may be tailored to cure it, or reduce the frequency and severity of such problems.

Data-driven policing and associated crime analysis are still in their infancy and are not typically integrated into the organizational culture as well as traditional policing strategies. Many agencies are still not aware of the advantages of an effective crime analysis unit, and others may not have the resources or knowledge to effectively integrate one. Of those that do employ crime analysis, many may not fully understand or accept this approach, or use it to its potential.

Additionally, police command staff typically are not analysts, so they may be unaware of how to guide this work to provide “actionable” crime analysis products that can be helpful for crime reduction efforts. At the same time, analysts are usually not police officers and may not be aware of how police respond to crime problems (both tactically and strategically), or what types of products will be most useful.

To be more effective at combating crime using data-driven strategies, we need to overcome these barriers and knowledge gaps. That is why the Bureau of Justice Assistance (BJA) established the Crime Analysis on Demand initiative. This initiative has a number of training and technical assistance opportunities focused on increasing crime analysis capacity in agencies across the nation. BJA’s National Training and Technical Assistance Center (NTTAC) is providing police agencies access to crime analysis experts that provide recommendations, training, and technical assistance to help agencies improve their application of crime analysis.

Additionally, the Police Foundation’s recent Crime Mapping and Analysis News publication provides a synopsis of the different services offered through this initiative. Other resources for crime analysis can be found on the International Association of Crime Analysts and the International Association of Law Enforcement Intelligence Analysts’ web sites.”

IBM Crime Analytics: Missing the mark? CTS Hits the bullseye!

Posted by Crime Tech Solutions – Your source for analytics in the fight against crime and fraud.

September 7, 2015. IBM announced this week a major update to its IBM i2 Safer Planet intelligence portfolio that includes a major overhaul of the widely used Analyst’s Notebook product. The product, which has become increasingly abandoned by its user base over the past five years, is now being positioned as ‘slicker’ than previous versions.

IBM suggests that the new version scales from one to 1,000 users and can ingest petabytes of information to visualize. (A single petabyte roughly translates to 20,000,000 four-drawer filing cabinets completely filled with text.)

That’s a lot of data. Seems to me that analysts are already inundated with data… now they need more?

This all raises the question: “Where is IBM headed with this product?”

The evidence seems to point to the fact that IBM wants this suite of products to compete head-to-head with money-raising machine and media darling Palantir Technologies. If I’m IBM, that makes sense. Palantir has been eating Big Blue’s lunch for a few years now, particularly at the lucrative US Federal market level. Worse yet, for IBM i2, is the recent news of a new competitor with even more powerful technology.

If I’m a crime or fraud analyst, however, I have to view this as IBM moving further and further away from my reality.

The reality? Nobody has ever yelled “Help! I’ve been robbed. Call the petabytes of ‘slick’ data!” No, this tiring ‘big data’ discussion is not really part of the day to day work for the vast majority of analysts. Smart people using appropriate data with intuitive and flexible crime technology solutions… that’s the reality for most of us.

So, as IBM moves their market-leading tool higher and higher into the stratosphere, where does the industry turn for more practical desktop solutions with realistic pricing? For more and more customers around the world, the answer is a crime and fraud link analytics tool from Crime Tech Solutions.

No, it won’t ingest 20,000,000 four-drawer filing cabinets of data, and it’s more ‘efficient’ than ‘slick’. Still, the product has been around for decades as a strong competitor to Analyst’s Notebook, and is well-supported by a network of strategic partners around the world. Importantly, it is the only American-made and supported alternative. Period. It’s also, seemingly, the last man standing in the market for efficient and cost-effective tools that can be used by real people doing their real jobs.

Is "Minority Report" pure fiction?

Posted by Douglas Wood.

Journalist Raj Shekhar had an interesting article in the Times of India this week.

It’s like PreCrime, only four decades early. The “predictive policing” system seen in the Tom Cruise blockbuster Minority Report is now taking shape in Delhi. But instead of the three slime-immersed psychic “Precogs” that system relied on, Delhi Police’s crime prediction will be based on cold, hard data.

Once Enterprise Information Integration Solution or ‘EI2S’—a system that puts petabytes of information from more than a dozen crime databases at police staff’s fingertips—is ready, Delhi Police will be able to implement its ‘Crime Forecast’ plan to predict when and where criminals will strike.

The technology is not as fanciful as it seems at first and is already being tried out in many important cities, including New York, Los Angeles, London and Berlin. Officers associated with the plan say the software will analyze police data for patterns, compare it with other data from jails, courts and other crime-fighting agencies, and alert police to the likely threats. Data will be available not only on the suspects but also their likely victims.

A global tender has been floated for the project and Delhi Police is in talks with various firms for the technology.

According to the article, the system can help pre-empt many situations. For example, a violent clash between two gangs. It can identify individuals who are likely to join gangs or take to crime in an area based on the analyses of their behaviour and network. It can also curb domestic violence by identifying a pattern and predicting the next attack, the article said.

It all boils down to spotting patterns in mountains of data using tremendous computing power. A police document about the plan states that investigators should be able to perform crime series identification, crime trend identification, hot spot analysis and general analysis of criminal profiles. Link analysis will help spot common indicators of a crime by establishing associations and non-obvious relationships between entities.

Using neighbourhood analysis, police will be able to understand crime events and the circumstances behind them in a small area as all the crime activity in a neighbourhood will be available for analysis. Criminal cases will be classified into multiple categories to understand what types of crime an area is prone to and the measures needed to curb them. Classification will be done through profiles of victims, suspects, localities and the modus operandi.

Another technique, called proximity analysis, will provide information about criminals, victims, witnesses and other people who are or were within a certain distance of the crime scene. By analyzing demographic and social trends, investigators will be able to understand the changes that have taken place in an area and their impact on criminality.
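To make the idea of proximity analysis concrete, here is a minimal Python sketch. It is not the Delhi Police system (the article gives no implementation details); it simply assumes each person record carries a latitude and longitude, and uses the standard haversine formula to list everyone within a chosen radius of a crime scene. The `PersonSighting` record, the coordinates and the names are all hypothetical.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class PersonSighting:
    """Hypothetical record: where a person of interest was placed."""
    name: str
    role: str  # e.g. "suspect", "victim", "witness"
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def people_near(scene_lat, scene_lon, sightings, radius_km):
    """Return (distance_km, sighting) pairs inside the radius, nearest first."""
    hits = []
    for s in sightings:
        d = haversine_km(scene_lat, scene_lon, s.lat, s.lon)
        if d <= radius_km:
            hits.append((d, s))
    return sorted(hits, key=lambda pair: pair[0])


# Made-up example data around an arbitrary point in Delhi.
SCENE = (28.6139, 77.2090)
SIGHTINGS = [
    PersonSighting("Suspect B", "suspect", 28.6200, 77.2150),
    PersonSighting("Witness A", "witness", 28.6145, 77.2095),
    PersonSighting("Victim C", "victim", 28.7041, 77.1025),
]

for distance, person in people_near(*SCENE, SIGHTINGS, radius_km=1.0):
    print(f"{person.name} ({person.role}): {distance:.2f} km from scene")
```

A real deployment would also filter by time window and would likely use a spatial index rather than a linear scan; this sketch only shows the distance test itself.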

Network analysis will also be a part of this project to identify the important characteristics and functions of individuals within and outside a network, the network’s strengths and weaknesses and its financial and communication data.
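One simple way to surface "important" individuals in a network is degree centrality: the share of other people in the network that each person is directly linked to. The Python sketch below is only an illustration of that one measure, under the assumption that links are undirected pairs of names; the article does not say which measures the Delhi project will use, and the sample names are invented.

```python
from collections import defaultdict


def build_graph(links):
    """Turn undirected (person, person) pairs into an adjacency map."""
    graph = defaultdict(set)
    for a, b in links:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def degree_centrality(graph):
    """Fraction of the other nodes each node is directly connected to."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbours) / (n - 1)
            for node, neighbours in graph.items()}


# Hypothetical call or association records between five people.
LINKS = [("A", "B"), ("A", "C"), ("A", "D"), ("D", "E")]

scores = degree_centrality(build_graph(LINKS))
for person, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(person, round(score, 2))
```

Here "A" scores highest because it touches three of the four other people, while "D" is the only bridge to "E". Measures such as betweenness would highlight that bridging role more directly.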

While the system could help fight crime and rid Delhi of its ‘crime capital’ tag, it is bound to raise concerns over privacy and abuse as no predictive system can be foolproof.

Swoop 'n Squat, Army strong, and major case management: This week's Crime Technology headlines:

Posted by Douglas Wood, Editor.

Swoop and Squat…

http://www.propertycasualty360.com/2015/03/09/swoop-squat-beware-of-these-insurance-fraudsters

Army strong analytics…

http://www.military.com/daily-news/2015/03/27/army-improves-systems-testing-to-deliver-more-capability-to-figh.html

Investigative Case Management…

http://www.mvariety.com/cnmi/cnmi-news/local/75212-commonwealth-bureau-of-investigation-has-a-new-building

WorksafeBC introduces new Major Case Management (MCM) protocols…

http://www.workerscompensation.com/compnewsnetwork/workers-comp-blogwire/20898-worksafe-bc-posts-update-on-safety-review-plan.html

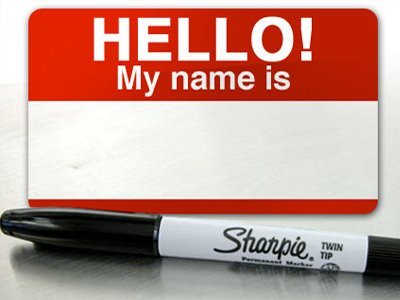

The Name Game Fraud

Posted by Douglas Wood, Editor. Alright everybody, let’s play a game. The name game!

“Shirley, Shirley bo Birley. Bonana fanna fo Firley. Fee fy mo Mirley. Shirley!” No, not THAT name game. (Admit it… you used to love singing the “Chuck” version, though.)

The name game I’m referring to is slightly more sinister, and relates to the criminal intent to deceive others for gain by slightly misrepresenting attributes in order to circumvent fraud detection techniques. Pretty much anywhere money, goods, or services are dispensed, folks play the name game.

Utilities, Insurance, Medicaid, retail, FEMA. You name it.

Several years ago, I helped a large online insurance provider determine the extent to which they were offering insurance policies to corporations and individuals with whom they specifically did not want to do business. Here’s what the insurer knew:

- They had standard application questions designed to both determine the insurance quote AND to ensure that they were not doing business with undesirables. These questions included things such as full name, address, telephone number, date of birth, etc… but also questions related to the insured property. “Do you live within a mile of a fire station?”, “Does your home have smoke detectors?”, and “Is your house made of matchsticks?”

- On top of the questions, the insurer had a list of entities with whom they knew they did not want to do business for one reason or another. Perhaps Charlie Cheat had some previously questionable claims… he would have been on their list.

In order to circumvent the fraud prevention techniques, of course, the unscrupulous types figured out how to mislead the insurer just enough so that the policy was approved. Once approved, the car would immediately be stolen. The house would immediately burn down, etc.

The most common way by which the fraudsters misled the insurers was a combination of The Name Game and modifying answers until the screening system was fooled. Through a combination of investigative case management and link analysis software, I went back and looked at several months of historical data and found some amazing techniques used by the criminals. Specifically, I found one customer who made 19 separate online applications – each time changing just one attribute or answer slightly – until the policy was issued. Within a week of the policy issue, a claim was made. You can use your imagination to determine if it was a legitimate claim. 😀

This customer, Charlie Cheat (obviously not his real name), first used his real name, address, telephone number, and date of birth… and answered all of the screening questions honestly. Because he did not meet the criteria AND appeared on an internal watch list for having suspicious previous claims, his application was automatically denied. Then he had his wife, Cheri Cheat, complete the application in hopes that the system would see a different name and approve the policy. Next, he tried variations on his own name, such as Chuck E. Cheat, and so on. Still no go. His address went from 123 Fifth Street to 123-A 5th Street. You get the picture.

Then he began to modify answers to the screening questions. All of a sudden, he DID live within a mile of a fire station… and his house was NOT made of matchsticks… and was NOT located next door to a fireworks factory. After almost two dozen attempts, he was finally issued the policy under a slightly revised name, a tweak in his address, and some less-than-truthful answers on the screening page. By investing in powerful investigative case management software with link analysis and fuzzy matching, this insurer was able to dramatically decrease the number of policies issued to known fraudsters or otherwise ineligible entities.

Every time a new policy is applied for, the system analyzes the data against previous responses and internal watch lists in real time. In other words, Charlie and Cheri just found it a lot more difficult to rip this insurer off. These same situations occur in other arenas, costing us millions annually in increased taxes and prices. So, what happened to the Cheats after singing the name game?

Let’s just say that after receiving a letter from the insurer, Charlie and Cheri started singing a different tune altogether.
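For readers curious what fuzzy matching against a watch list can look like in practice, here is a small Python sketch. It is not the insurer's actual software, which is not described in any detail above. It assumes a watch list of name and address pairs, normalizes both sides, and averages two string-similarity scores from the standard library's `difflib.SequenceMatcher`. The 0.7 threshold and all records are made up for illustration.

```python
import re
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse punctuation/whitespace so small edits matter less."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def similarity(a, b):
    """Similarity ratio between 0.0 and 1.0 on the normalized strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def screen(application, watch_list, threshold=0.7):
    """Return (score, watch-list name) for entries that look like the applicant."""
    hits = []
    for entry in watch_list:
        score = (similarity(application["name"], entry["name"])
                 + similarity(application["address"], entry["address"])) / 2
        if score >= threshold:
            hits.append((round(score, 2), entry["name"]))
    return sorted(hits, reverse=True)


# Hypothetical watch list with a single entry.
WATCH_LIST = [{"name": "Charlie Cheat", "address": "123 Fifth Street"}]

attempts = [
    {"name": "Chuck E. Cheat", "address": "123-A 5th Street"},
    {"name": "Jane Honest", "address": "77 Elm Road"},
]
for attempt in attempts:
    print(attempt["name"], "->", screen(attempt, WATCH_LIST) or "no match")
```

Production systems typically add phonetic matching, nickname tables (Charlie versus Chuck) and address standardization, and they compare each new application against earlier applications as well as the watch list. The point of the sketch is only that an exact-match check misses these variants while a similarity score does not.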

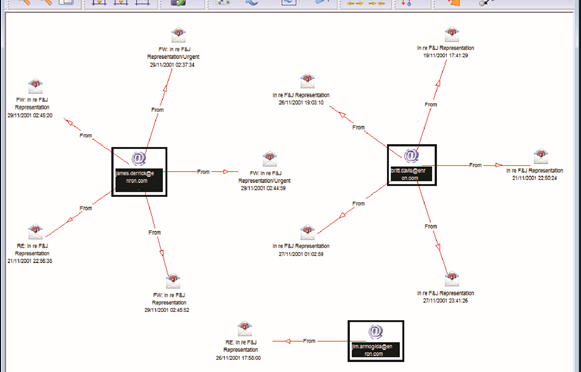

Using Link Analysis to untangle fraud webs

Posted by Douglas Wood, Editor.

NOTE: This article originally appeared HERE by Jane Antonio. I think it’s a great read…

Link analysis has become an important technique for discovering hidden relationships involved in healthcare fraud. An excellent online source, FierceHealthPayer:AntiFraud, recently spoke to Vincent Boyd Bryant about the value of this tool for payer special investigations units.

A former biometric scientist for the U.S. Department of Defense, Bryant has 30 years of experience in law enforcement and intelligence analysis. He’s an internationally-experienced investigations and forensics expert who’s worked for a leading health insurer on government business fraud and abuse cases.

How does interactive link analysis help insurers prevent healthcare fraud? Can you share an example of how the tool works?

Bryant: One thing criminals do best is hide pots of money in different places. As a small criminal operation becomes successful, it will often expand its revenue streams through associated businesses. Link analysis is about trying to figure out where all those different baskets of revenue may be. Insurers are drowning in a sea of theft. Here’s where link analysis becomes beneficial. Once insurers discover a small basket of money lost to a criminal enterprise, then serious research needs to go into finding out who owns the company, who they’re associated with, what kinds of business they’re doing and if there are claims associated with it.

You may find a clinic, for example, connected to and working near a pharmacy, a medical equipment supplier, a home healthcare services provider and a construction company. Diving into those companies and what they do, you find that they’re serving older patients for whom multiple claims from many providers exist. The construction company may be building wheelchair ramps on homes. And you may find that the providers are claiming payment for dead people. Overall, using this tool requires significant curiosity and a willingness to look beyond the obvious.

Any investigation consists of aggregating facts, generating impressions and creating a theory about what happened. Then you work to confirm or disconfirm your theory. It’s important to have tools that let you take large masses of facts and visualize them in ways that cue you to look closer.

Let’s say you investigate a large medical practice and interview “Doctor Jones.” The day after the interview, you learn through link analysis that he transferred $11 million from his primary bank account to the Cayman Islands. And in looking at Dr. Jones’ phone records, you see he called six people, each of whom was the head of another individual practice on whose board Dr. Jones sits. Now the investigation expands, since the timing of those phone calls was contemporaneous to the money taking flight.

Why are tight clusters of similar entities possible indicators of fraud, waste or abuse?

Bryant: When you find a business engaged in dishonest practices and see its different relationships with providers working out of the same building, this gives rise to reasonable suspicion. The case merits a closer look. Examining claims and talking to members served by those companies will give you an indication of how legitimate the operation is.

What are the advantages of link analysis to payer special investigation units, and how are SIUs using its results?

Bryant: Link analysis can define relationships through data insurers haven’t always had, data that traditionally belonged to law enforcement.

Link analysis results in a visual reference that can take many forms: It can look like a family tree, an organizational chart or a time line. This reference helps investigators assess large masses of data for clustering and helps them arrive at a conclusion more rapidly.

Using link analysis, an investigator can dump in large amounts of data–such as patient lists from multiple practices–and see who’s serving the same patient. This can identify those who doctor shop for pain medication, for example. Link analysis can chart where this person was and when, showing the total amount of medication prescribed and giving you an idea of how the person is operating.
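As a rough illustration of the patient-list example above, the following Python sketch inverts a set of per-practice patient lists into a per-patient view and reports anyone seen at several practices. It is a simplified stand-in for what a link analysis tool does visually; the practice names, patient IDs and the `min_practices` cut-off are all hypothetical.

```python
from collections import defaultdict


def practices_by_patient(patient_lists):
    """Invert {practice: [patients]} into {patient: {practices}}."""
    seen = defaultdict(set)
    for practice, patients in patient_lists.items():
        for patient in patients:
            seen[patient].add(practice)
    return seen


def shared_patients(patient_lists, min_practices=3):
    """Patients who appear on at least `min_practices` different lists."""
    return {
        patient: sorted(practices)
        for patient, practices in practices_by_patient(patient_lists).items()
        if len(practices) >= min_practices
    }


# Made-up patient lists from four practices.
PATIENT_LISTS = {
    "Clinic North": ["P-001", "P-002", "P-003"],
    "Clinic South": ["P-002", "P-004"],
    "Pain Center": ["P-002", "P-003", "P-005"],
    "Family Practice": ["P-002", "P-006"],
}

for patient, practices in shared_patients(PATIENT_LISTS).items():
    print(patient, "seen at", ", ".join(practices))
```

A patient appearing at several practices is only a cue to look closer, not proof of doctor shopping. An investigator would still need prescription dates and quantities to see how the person is operating.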

What types of data does link analysis integrate?

Bryant: Any type of data that can be sorted and tied together can be loaded into the tool. Examples include telephone records, addresses, vehicle information, corporate records that list individuals serving on boards and banking and financial information. Larger supporting documents can be loaded and linked to the charts, making cases easier to present to a jury.

Link analysis can pull in data from state government agencies, county tax records or police records from state departments of correction and make those available in one bucket. In most cases, this is more efficient than the hours of labor needed to dig up these types of public records through site visits.

Is there anything else payers should know about link analysis that wasn’t covered in the above questions?

Bryant: The critical thing is remembering that you don’t know what you don’t know. If a provider or member is stealing from the plan in what looks like dribs and drabs, insurers may never discover the true extent of the losses. But if–as a part of any fraud allegation that arises–you look at what and who is associated with the subject of the complaint, what started as a $100,000 questionable claims allegation can expose millions of dollars in inappropriate billings spread across different entities.

Asking data questions

Posted by Douglas Wood, Editor. http://www.linkedin.com/in/dougwood.

A brief read and good perspective from my friend Chris Westphal of Raytheon. The article is by Anna Forrester of ExecutiveGov.com.

Federal managers should invest in technology that helps them extract insights from data, and should base their investment decisions on the specific problems they want to solve and the information they want to learn, Federal Times reported Friday.

Rutrell Yasin writes that these managers should follow three steps as they seek to compress the high volume of data their agencies encounter in daily tasks and to derive value from it.

According to Shawn Kingsberry, chief information officer for the Recovery Accountability and Transparency Board, federal managers should first determine the questions they need to ask of data then create a profile for the customer or target audience.

Next, they should locate the data and their sources then correspond with those sources to determine quality of data, the report said. “Managers need to know if the data is in a federal system of records that gives the agency terms of use or is it public data,” writes Yasin.

Finally, they should consider the potential impact of the data, the insights and resulting technology investments on the agency.

Yasin reports that the Recovery Accountability and Transparency Board uses data analytics tools from Microsoft, SAP and SAS and link analysis tools from Palantir Technologies.

According to Chris Westphal, director of analytics technology at Raytheon, organizations should invest in a platform that gathers data from separate sources into a single data repository with analytics tools.

Yasin adds that agencies should also appoint a chief data officer and data scientists or architects to assist the CIO and CISO in these areas.

Perhaps a nice change at NICE Actimize?

Posted by Douglas Wood, Editor. http://www.linkedin.com/in/dougwood

Though not publicly released, news out of NICE Actimize is that long-time CEO Amir Orad is leaving the company effective May 1. Indicative of the ‘what a small world this is’ nature of the financial crimes technology marketplace, former Pegasystems co-founder and head of Americas for BAE Systems Detica, Joe Friscia, will be taking over the helm at that time.

Mr. Orad led NICE Actimize’s product and strategy functions prior to his five-year tenure as CEO. During his tenure, he scaled the business size over six-fold. He is also a founding board member at BillGuard, the venture-backed personal finance analytics and security mobile app company.

Prior to Actimize, Orad was co-founder and CMO of Cyota, a cyber security and payment fraud cloud company protecting over 100 million online users, acquired by RSA Security for $145M. Following the acquisition, he was VP Marketing at RSA.

I’ve known both Amir and Joe for several years, and have a tremendous amount of respect for both gentlemen. While it’s sad to see Amir leave the organization, I know that his rather large shoes will be more than adequately filled by Mr. Friscia.

Joe’s background is well-suited to this new position, and all of us here at FightFinancialCrimes wish him well. Joe joined Detica when BAE Systems acquired Norkom Technologies in early 2011, where he served as General Manager and Executive Vice President of the Americas. Joe led the rapid growth of Norkom in the Americas, with direct responsibility for sales, revenue and profit as well as managing multi-discipline teams based in North America. Prior to Norkom, Joe helped start Pegasystems Inc in 1984, a successful Business Process Management software company that went public in 1996.

Best of luck to Amir in his new ventures, and to Joe as he guides Actimize into its next phase.

Part Two: Major Investigation Analytics – Big Data and Smart Data

Posted by Douglas Wood, Editor.

As regular readers of this blog know, I spend a great deal of time writing about the use of technology in the fight against crime – financial and otherwise. In Part One of this series, I overviewed the concept of Major Investigation Analytics and Investigative Case Management.

I also overviewed the major providers of this software technology – Palantir Technologies, Case Closed Software, and Visallo. The latter two recently became strategic partners, in fact.

The major case for major case management (pun intended) was driven home at a recent crime and investigation conference in New York. Full Disclosure: I attended the conference for educational purposes as part of my role at Crime Tech Weekly. Throughout the three-day conference, speaker after speaker talked about making sense of data. I think if I had heard the term ‘big data’ one more time I’d have gone insane. Nevertheless, that was the topic du jour as you can imagine, and the 3 V’s of big data – volume, variety, and velocity – remain a front and center topic for the vendor community serving the investigation market.

According to one report, 96% of everything we do in life – personal or at work – generates data. That statement probably best sums up how big ‘big data’ is. Unfortunately, there was very little discussion about how big data can help investigate major crimes. There was a lot of talk about analytics, for sure, but there was a noticeable lack of ‘meat on the bone’ when it came to major investigation analytics.

Nobody has ever yelled out “Help, I’ve been attacked. Someone call the big data!” That’s because big data doesn’t, in and of itself, do anything. Once you can move ‘big data’ into ‘smart data’, however, you have an opportunity to investigate and adjudicate crime. To me, smart data (in the context of investigations) is a subset of an investigator’s ability to:

- Quickly triage a threat (or case) using only those bits of data that are most immediately relevant

- Understand the larger scope of the crime through experience and crime analytics, and

- Manage that case through intelligence-led analytics and investigative case management, data sharing, link exploration, text analytics, and so on.

Connecting the dots, as they say. From an investigation perspective, however, connecting dots can be daunting. In the children’s game, there is a defined starting point and a set of rules. We simply need to follow the instructions and the puzzle is solved. Not so in the world of the investigator. The ‘dots’ are not as easy to find. It can be like looking for a needle in a haystack, but the needle is actually broken into pieces and spread across ten haystacks.

Big data brings those haystacks together, but only smart data finds the needles… and therein lies the true value of major investigation analytics.

Major Investigation Analytics – No longer M.I.A. (Part One)

Posted by Douglas Wood, Editor. http://www.linkedin.com/in/dougwood

Long before the terrorist strikes of 9/11 created a massive demand for risk and investigation technologies, there was the case of Paul Bernardo.

Paul Kenneth Bernardo was suspected of more than a dozen brutal sexual assaults in Scarborough, Canada, within the jurisdiction of the Ontario Provincial Police. As his attacks grew in frequency they also grew in brutality, to the point of several murders. Then, just as police were closing in, the attacks suddenly stopped. That is when the Ontario police knew they had a problem. Because their suspect was not in jail, they knew he had either died or fled to a location outside their jurisdiction to commit his crimes.

The events following Bernardo’s disappearance in Toronto and his eventual capture in St. Catharines would ultimately lead to an intense 1995 investigation into police practices throughout the Province of Ontario, Canada. The investigation, headed by the late Justice Archie Campbell, showed glaring weaknesses in investigation management and information sharing between police districts.

Campbell studied the court and police documents for four months and then produced a scathing report that documented systemic jurisdictional turf wars among the police forces in Toronto and the surrounding regions investigating a string of nearly 20 brutal rapes in the Scarborough area of Toronto and the murders of two teenaged girls in the St. Catharines area. He concluded that the investigation “was a mess from beginning to end.”

Campbell went on to conclude that there was an “astounding and dangerous lack of co-operation between police forces” and a litany of errors, miscalculations and disputes. Among the Justice’s findings was a key recommendation that an investigative case management system was needed to:

- Record, organize, manage, analyze and follow up all investigative data

- Ensure all relevant information sources are applied to the investigation

- Recognize at an early stage any linked or associated incidents

- “Trigger” alerts to users of commonalities between incidents

- Embody an investigative methodology incorporating standardized procedures
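To show what "recognizing linked incidents" and "triggering alerts on commonalities" can mean at the data level, here is a minimal Python sketch. It is not the Ontario MCM software and makes no claim about how that system works. It assumes each incident is logged with a few attribute lists (vehicles, phone numbers and so on) and raises an alert whenever a newly logged value already appears in an earlier incident. The `IncidentIndex` class and all sample values are hypothetical.

```python
from collections import defaultdict


class IncidentIndex:
    """Keeps an index of attribute values and the incidents that mention them."""

    def __init__(self):
        self._index = defaultdict(set)

    def add(self, incident_id, attributes):
        """Log an incident; return alerts for values seen in earlier incidents."""
        alerts = []
        for field, values in attributes.items():
            for value in values:
                # Light normalization so "ABC 123" and "abc 123" match.
                key = (field, value.strip().lower())
                earlier = self._index[key]
                if earlier:
                    alerts.append((field, value, sorted(earlier)))
                earlier.add(incident_id)
        return alerts


index = IncidentIndex()
index.add("INC-1", {"vehicle": ["ABC 123"], "phone": ["555-0100"]})
index.add("INC-2", {"vehicle": ["XYZ 789"]})

# A third incident, perhaps logged by a different police service.
for field, value, linked in index.add("INC-3", {"vehicle": ["abc 123"]}):
    print(f"ALERT: {field} {value!r} also appears in {', '.join(linked)}")
```

The value of a shared index is that the third incident is linked to the first even if different forces recorded them, which is the kind of cross-jurisdiction commonality the recommendations above are concerned with.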

Hundreds of vendors aligned to provide this newly mandated technology, and eventually a vendor was tasked with making it real with the Ontario Major Case Management (MCM) program. With that, a major leap in the evolution of investigation analytics had begun. Today, the market leaders include IBM i2, Case Closed Software, Palantir Technologies, and Visallo.

Recently, the Ottawa Citizen newspaper published an in-depth article on the Ontario MCM system. I recommend reading it.

Investigation analytics and major case management

The components of major investigation analytics include: Threat Triage, Crime & Fraud Analytics, and Intelligence-Led Investigative Case Management. Ontario’s MCM is an innovative approach to solving crimes and dealing with complex incidents using these components. All of Ontario’s police services use this major investigation analytics tool to investigate serious crimes – homicides, sexual assaults and abductions. It combines specialized police training and investigation techniques with specialized software systems. The software manages the vast amounts of information involved in investigations of serious crimes.

Major investigation analytics helps solve major cases by:

- Providing an efficient way to keep track of, sort and analyze huge amounts of information about a crime: notes, witness statements, door-to-door leads, names, locations, vehicles and phone numbers are examples of the types of information police collect

- Streamlining investigations

- Making it possible for police to see connections between cases so they can reduce the risk that serial offenders will avoid being caught

- Preventing crime and reducing the number of potential victims by catching offenders sooner.

See Part Two of this series here.